Poplarml - Deploy ML Models to Production

Poplarml Overview

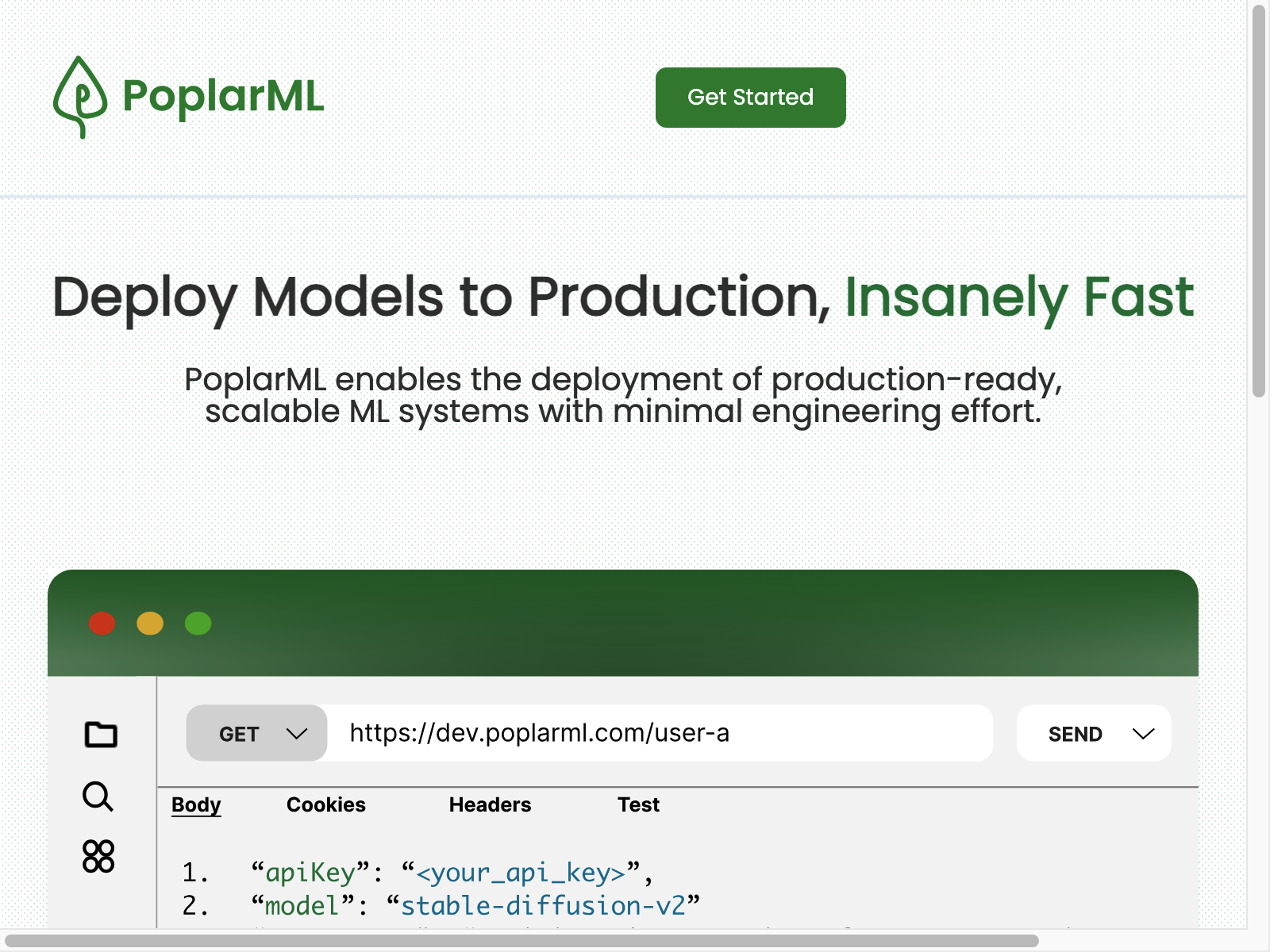

Poplarml is a tool for deploying machine learning models to production environments. Deployed models are invoked through a REST API and serve real-time inference on a fleet of GPUs.

Poplarml manages the infrastructure so that you can focus on model development. Deployment is driven from a command-line interface (CLI).

Poplarml Key Features

- Seamless Deployment: Poplarml's CLI tool allows you to deploy your ML models to a fleet of GPUs with ease, eliminating the need for complex infrastructure setup.

- Real-time Inference: Invoke your models through a REST API and leverage the power of GPU-accelerated inference for real-time responses.

- Scalability: Poplarml is designed to scale your deployment as your needs grow, ensuring your models can handle increasing workloads without interruption.

- Monitoring and Logging: Gain insights into your model's performance with Poplarml's robust monitoring and logging capabilities, helping you identify and address any issues quickly.

- Easy Integration: Poplarml seamlessly integrates with your existing workflows and tools, allowing you to incorporate it into your development pipeline without disruption.
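
As a rough illustration of what invoking a deployed model through a REST API can look like, here is a minimal Python sketch using only the standard library. The endpoint URL, the `Authorization` header scheme, and the `{"inputs": ...}` payload shape are all hypothetical placeholders; this article does not document Poplarml's actual API, so check the official documentation for the real request format.

```python
import json
import urllib.request

# Hypothetical placeholders: the real endpoint and auth scheme are not
# documented in this article.
API_URL = "https://api.example.com/v1/models/my-model/infer"
API_KEY = "YOUR_API_KEY"


def build_inference_request(inputs, url=API_URL, api_key=API_KEY):
    """Build (but do not send) a JSON POST request for a model endpoint."""
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
    )


def invoke(request, timeout=10.0):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Only builds the request; call invoke(req) against a real endpoint.
    req = build_inference_request([[0.1, 0.2, 0.3]])
    print(req.get_method(), req.full_url)
```

Keeping request construction separate from sending makes the payload easy to inspect or unit-test without network access.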

Poplarml Use Cases

Poplarml is suitable for a wide range of machine learning use cases, including:

- Image Recognition: Deploy your computer vision models to classify, detect, or segment images in real-time.

- Natural Language Processing: Leverage Poplarml to deploy your language models for tasks like text generation, sentiment analysis, or named entity recognition.

- Predictive Analytics: Use Poplarml to deploy your predictive models for forecasting, anomaly detection, or recommendation systems.

Poplarml Pros and Cons

Pros:

- Streamlined deployment process with a user-friendly CLI

- Supports real-time inference with GPU-accelerated performance

- Scalable and reliable infrastructure for growing workloads

- Comprehensive monitoring and logging features

- Easy integration with existing workflows and tools

Cons:

- Limited customization options for advanced deployment scenarios

- Dependency on the Poplarml platform for infrastructure management

Poplarml Pricing

Poplarml offers three monthly plans:

| Plan | CPUs | GPUs | Inference Requests | Price (per month) |
|---|---|---|---|---|
| Starter | 2 | 1 | 100K | $99 |
| Professional | 8 | 2 | 500K | $499 |
| Enterprise | 16 | 4 | Unlimited | Custom Pricing |
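
To compare the fixed-price plans, it can help to work out the effective price per 1,000 requests. The short Python sketch below uses only the figures from the table. It assumes the listed request counts are monthly quotas that a plan must cover, which the table does not state explicitly, and it leaves out the Enterprise plan because its price is custom.

```python
# Figures copied from the pricing table; Enterprise is omitted because
# its price is custom.
PLANS = {
    "Starter": {"price_usd": 99, "included_requests": 100_000},
    "Professional": {"price_usd": 499, "included_requests": 500_000},
}


def cost_per_thousand(plan_name):
    """Effective USD price per 1,000 requests when the full quota is used."""
    plan = PLANS[plan_name]
    return plan["price_usd"] * 1000 / plan["included_requests"]


def cheapest_plan(monthly_requests):
    """Return the cheapest fixed-price plan whose quota covers the volume.

    Returns None when no fixed-price plan is large enough. Treating the
    request count as a hard monthly quota is an assumption.
    """
    candidates = [
        (plan["price_usd"], name)
        for name, plan in PLANS.items()
        if plan["included_requests"] >= monthly_requests
    ]
    return min(candidates)[1] if candidates else None


if __name__ == "__main__":
    for name in PLANS:
        print(f"{name}: ${cost_per_thousand(name):.3f} per 1,000 requests")
    print(cheapest_plan(250_000))
```

At full utilization the two plans cost nearly the same per request ($0.99 versus about $1.00 per 1,000), so the choice comes down to volume and the CPU and GPU allocation rather than unit price.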

Poplarml Alternatives

While Poplarml is a powerful AI tool for deploying ML models, there are a few alternatives worth considering:

- Kubeflow: An open-source platform for running machine learning workflows on Kubernetes, offering more customization options.

- TensorFlow Serving: A flexible, high-performance serving system for machine learning models, developed by Google.

- AWS SageMaker: Amazon's fully managed machine learning service, providing a comprehensive suite of tools for model deployment and management.

Poplarml FAQ

- What programming languages does Poplarml support? Poplarml supports models built using popular machine learning frameworks like TensorFlow, PyTorch, and scikit-learn, allowing you to deploy models written in Python, Java, and other compatible languages.

- Can Poplarml be used for real-time inference? Yes, Poplarml is designed for real-time inference, leveraging GPU acceleration to provide low-latency responses for your machine learning models.

- How does Poplarml handle model updates and versioning? Poplarml makes it easy to manage model updates and versioning. You can seamlessly deploy new model versions to your fleet of GPUs, ensuring your production environment always uses the latest and most accurate models.

- What level of support does Poplarml offer? Poplarml provides comprehensive support, including documentation, tutorials, and a responsive customer service team to help you with any questions or issues you may encounter.

To learn more about Poplarml and how it can streamline your machine learning deployment process, visit the official website at https://poplarml.com/.