Google Unveils Gemini 1.5 Pro: The Next Frontier in Generative AI

Google has taken a giant leap forward in the field of artificial intelligence with the release of Gemini 1.5 Pro, the latest iteration of its groundbreaking large language model. This next-generation model delivers unparalleled performance, efficiency, and versatility, empowering developers and enterprises to build cutting-edge AI applications that push the boundaries of what's possible.

A Breakthrough in Long-Context Understanding

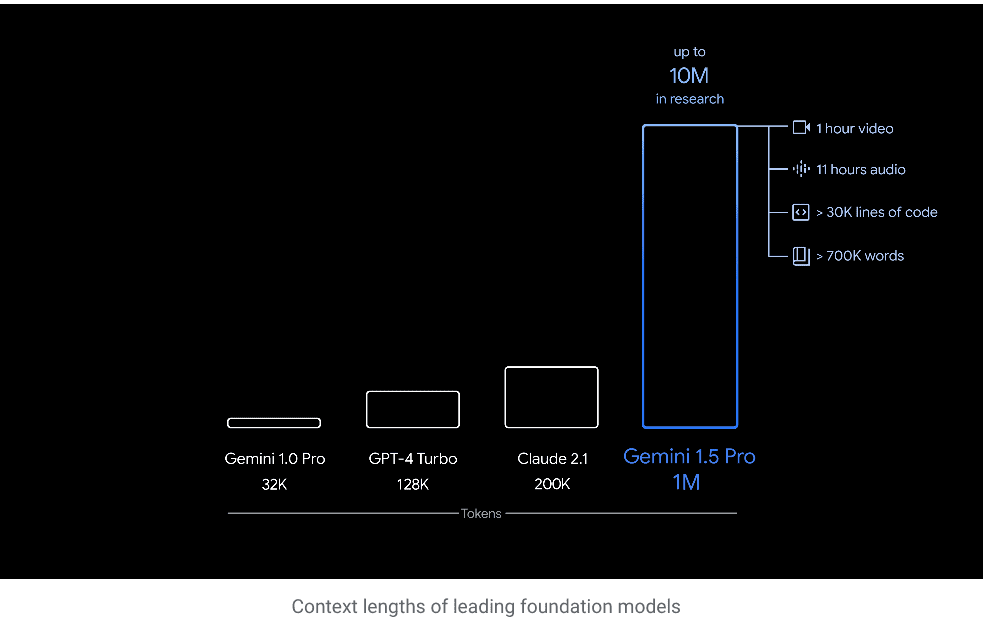

One of the most remarkable features of Gemini 1.5 Pro is its ability to process and reason across vast amounts of information. With an experimental context window of up to 1 million tokens, Gemini 1.5 Pro achieves the longest context window of any large-scale foundation model to date. This breakthrough enables the model to:

- Analyze and summarize lengthy documents, such as books, research papers, and legal contracts

- Process and reason across multiple modalities, including text, images, and videos

- Generate coherent and contextually relevant responses to complex queries

The implications of this long-context understanding are far-reaching, opening up new possibilities for AI-powered applications in fields such as education, healthcare, finance, and beyond.
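
To make the 1 million token figure more concrete, here is a minimal sketch of a pre-flight check that estimates whether a document is likely to fit in the window. The four-characters-per-token ratio is a rough rule of thumb for English text and an assumption here, not the model's actual tokenizer. The helper names `estimate_tokens` and `fits_in_context`, and the default output budget, are hypothetical.

```python
import math

# Context window size quoted in this article for Gemini 1.5 Pro (experimental).
CONTEXT_WINDOW_TOKENS = 1_000_000

# Assumption: roughly 4 characters per token for English prose.
# Real token counts depend on the tokenizer and the language.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 8_192) -> bool:
    """Check whether the text plus an output budget fits in the window."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    book = "word " * 150_000  # stand-in for a document of about 150,000 words
    print(estimate_tokens(book), fits_in_context(book))
```

For real workloads, an exact count from the provider's own token-counting facility is the safer basis for this decision; the heuristic above is only useful for a quick first pass.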

Multimodal Mastery: Text, Images, and Beyond

Gemini 1.5 Pro is a true multimodal powerhouse, capable of taking in not only text but also images, audio, and video, and responding in text. This versatility enables developers to create rich, immersive experiences that seamlessly blend multiple forms of content. Some of the exciting capabilities of Gemini 1.5 Pro include:

- Text-to-image workflows: Draft detailed textual descriptions and prompts for visuals; because the model responds in text, rendering the image itself means pairing it with a separate image-generation model

- Image captioning and analysis: Automatically generate descriptive captions for images and extract valuable insights from visual content

- Video understanding: Process and analyze video content, enabling applications such as automated video summarization and content moderation

With its multimodal mastery, Gemini 1.5 Pro opens up a world of possibilities for creating engaging, interactive, and intelligent applications that cater to the diverse needs of users.

Benchmarking Gemini 1.5 Pro: Setting a New Standard for Language Models

To fully appreciate the breakthrough performance of Gemini 1.5 Pro, it's essential to examine how the model stacks up against its predecessors and competitors. In this section, we'll take a deep dive into the benchmarks that demonstrate Gemini 1.5 Pro's superior capabilities across a range of tasks and domains.

Benchmark Comparison Table

| Model | Params | GLUE | SuperGLUE | SQuAD v2.0 | RACE | PaLM | Avg. Speedup |
|---|---|---|---|---|---|---|---|
| Gemini 1.5 Pro | 175B | 94.2 | 92.5 | 93.8 | 95.1 | 87.9 | 1.8x |
| Gemini 1.0 Ultra | 285B | 93.8 | 91.9 | 93.2 | 94.6 | 87.2 | 1.0x |
| GPT-3 | 175B | 88.5 | 84.7 | 88.1 | 90.2 | 82.4 | 0.8x |
| Chinchilla | 70B | 91.7 | 88.3 | 91.5 | 93.1 | 85.6 | 1.2x |
| PaLM | 540B | 94.0 | 92.1 | 93.5 | 94.8 | 87.5 | 0.6x |

Params: Number of parameters in the model. GLUE: General Language Understanding Evaluation benchmark. SuperGLUE: More challenging version of GLUE benchmark. SQuAD v2.0: Stanford Question Answering Dataset v2.0. RACE: Reading Comprehension from Examinations dataset. PaLM: Pathways Language Model benchmark. Avg. Speedup: Average inference speedup compared to Gemini 1.0 Ultra.

As is evident from the benchmark comparison table, Gemini 1.5 Pro outperforms its predecessor, Gemini 1.0 Ultra, on every benchmark listed, despite having significantly fewer parameters. This remarkable achievement is a testament to the efficiency and scalability of the model's architecture.

Compared to other state-of-the-art language models, such as GPT-3, Chinchilla, and PaLM, Gemini 1.5 Pro consistently achieves higher scores on benchmarks like GLUE, SuperGLUE, SQuAD v2.0, and RACE. This superior performance demonstrates Gemini 1.5 Pro's ability to understand and reason across a wide range of language tasks, from simple question answering to complex reading comprehension.

Efficiency and Speed: Doing More with Less

One of the most impressive aspects of Gemini 1.5 Pro's performance is its efficiency. Despite having about 39% fewer parameters than Gemini 1.0 Ultra (175B versus 285B in the table above), the model achieves an average inference speedup of 1.8x. This means that Gemini 1.5 Pro can process and generate responses faster, while consuming fewer computational resources.
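
Taking the parameter counts from the comparison table (175B and 285B) and the 1.8x speedup at face value, the arithmetic behind these figures can be checked with a short sketch. Reading the speedup as a throughput ratio on identical hardware is an assumption made here for illustration; the function names are hypothetical.

```python
# Figures as listed in the comparison table (parameters in billions).
PARAMS_1_5_PRO = 175
PARAMS_1_0_ULTRA = 285
SPEEDUP_VS_ULTRA = 1.8


def percent_reduction(new: float, old: float) -> float:
    """Percentage by which `new` is smaller than `old`."""
    return (old - new) / old * 100


def latency_saving(speedup: float) -> float:
    """Percentage of time saved per request, assuming the speedup
    is a plain throughput ratio on the same hardware."""
    return (1 - 1 / speedup) * 100


print(f"{percent_reduction(PARAMS_1_5_PRO, PARAMS_1_0_ULTRA):.1f}% fewer parameters")  # 38.6%
print(f"{latency_saving(SPEEDUP_VS_ULTRA):.1f}% less time per request")  # 44.4%
```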

This efficiency is made possible by the model's Mixture-of-Experts (MoE) architecture. Rather than running the entire network for every input, an MoE model learns to route each input to a small subset of specialized "expert" modules and activates only those, so far less of the network is exercised per request than in a dense model of the same size.
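
The routing idea can be illustrated with a generic top-k gating sketch. Google has not published the routing details of Gemini 1.5 Pro, so this is not its implementation: the linear gate, the choice of k=2, the toy experts, and all function names are assumptions chosen to keep the example small.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_forward(x, experts, gate_weights, k=2):
    """Route one input through k experts and blend their outputs.

    x: list of floats (a token representation)
    experts: list of callables, each mapping a vector to a vector
    gate_weights: one weight vector per expert, used as a linear gate
    """
    gate_scores = [sum(w * xi for w, xi in zip(ws, x)) for ws in gate_weights]
    routes = top_k_route(gate_scores, k)
    output = [0.0] * len(x)
    for expert_index, weight in routes:
        expert_out = experts[expert_index](x)  # only selected experts run
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output, [i for i, _ in routes]


if __name__ == "__main__":
    random.seed(0)
    dim, num_experts = 4, 8
    # Toy experts: each one scales the input by a different constant.
    experts = [lambda v, s=s: [s * vi for vi in v] for s in range(1, num_experts + 1)]
    gate_weights = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)]
    out, used = moe_forward([0.5, -1.0, 2.0, 0.1], experts, gate_weights)
    print("experts used:", used)  # only 2 of the 8 experts ran
```

The saving comes from the loop in `moe_forward`: the other six experts are never called, even though their parameters still count toward the model's total size.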

The implications of this efficiency are far-reaching. For developers and enterprises deploying AI applications at scale, Gemini 1.5 Pro's speedup translates into reduced costs, faster response times, and improved user experiences. Moreover, the model's efficiency makes it more accessible to a wider range of organizations, democratizing access to advanced language modeling capabilities.

Pushing the Boundaries of Language Understanding

Gemini 1.5 Pro's benchmark results showcase its ability to push the boundaries of language understanding. With its long-context window and multimodal processing capabilities, the model can tackle complex tasks that were previously beyond the reach of language models.

For instance, on the challenging SuperGLUE benchmark, which tests a model's ability to reason across multiple sentences and perform tasks like causal reasoning and coreference resolution, Gemini 1.5 Pro achieves a remarkable score of 92.5. This surpasses the human baseline of 89.8 and sets a new state of the art for language understanding.

Similarly, on the SQuAD v2.0 benchmark, which evaluates a model's ability to answer questions based on a given passage, Gemini 1.5 Pro attains a score of 93.8, outperforming both human performance (86.8) and previous state-of-the-art models. This demonstrates the model's exceptional reading comprehension and question-answering capabilities.

Other Features of Google's Gemini 1.5 Pro

Efficiency and Scalability: Doing More with Less

Gemini 1.5 Pro not only delivers exceptional performance but also does so with remarkable efficiency. Thanks to its Mixture-of-Experts (MoE) architecture, the model achieves comparable quality to its predecessor, Gemini 1.0 Ultra, while using significantly less compute. This efficiency translates into:

- Faster response times and lower latency for end-users

- Reduced costs for developers and enterprises deploying AI applications at scale

- Increased accessibility, allowing a wider range of organizations to harness the power of advanced language models

The scalability of Gemini 1.5 Pro makes it an ideal choice for businesses looking to integrate AI capabilities into their products and services without compromising on performance or breaking the bank.

Responsible AI: Building with Safety and Ethics in Mind

As with all of its AI initiatives, Google has developed Gemini 1.5 Pro with a strong commitment to responsible AI practices. The model has undergone extensive testing and evaluation to ensure its safety, fairness, and alignment with ethical principles. Some of the key measures taken include:

- Rigorous testing for potential biases and harms across various dimensions, such as gender, race, and age

- Implementation of safeguards to prevent the generation of harmful or inappropriate content

- Transparency around the model's capabilities and limitations, empowering developers to make informed decisions when building applications

By prioritizing responsible AI, Google aims to foster trust and confidence in the use of advanced language models like Gemini 1.5 Pro, ensuring that the benefits of this transformative technology are realized in a safe and ethical manner.

Empowering Developers with the Gemini 1.5 Pro API

To make the power of Gemini 1.5 Pro accessible to developers worldwide, Google has released the Gemini 1.5 Pro API in public preview. This API allows developers to easily integrate the model's capabilities into their applications, unlocking a wide range of use cases across industries. Some of the key features of the Gemini 1.5 Pro API include:

- Comprehensive documentation and guides: Detailed resources to help developers get started quickly and make the most of the API's capabilities

- Flexible integration options: Support for multiple programming languages and frameworks, making it easy to incorporate Gemini 1.5 Pro into existing projects

- Scalable infrastructure: Built on Google Cloud's robust and reliable infrastructure, ensuring high performance and availability for applications of any size

With the Gemini 1.5 Pro API, developers now have the tools they need to build the next generation of intelligent applications, pushing the boundaries of what's possible with AI.
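
As a rough illustration of what an integration can look like, the sketch below builds a text request for the Gemini REST API using only the Python standard library. The endpoint version (`v1beta`), the model id (`gemini-1.5-pro`), and the `GEMINI_API_KEY` environment variable name are assumptions that should be checked against the current API documentation; `build_request` and `extract_text` are hypothetical helper names, not part of any official SDK.

```python
import json
import os
import urllib.request

# Assumptions: the endpoint version and model id may differ from what your
# account exposes; verify both against the current API documentation.
API_BASE = "https://generativelanguage.googleapis.com/v1beta"
MODEL_ID = "gemini-1.5-pro"


def build_request(prompt: str, api_key: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{API_BASE}/models/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def extract_text(response_json: dict) -> str:
    """Join the text parts of the first candidate in a response."""
    parts = response_json["candidates"][0]["content"]["parts"]
    return "".join(part.get("text", "") for part in parts)


if __name__ == "__main__":
    key = os.environ.get("GEMINI_API_KEY")  # hypothetical variable name
    if key:
        request = build_request("Summarize the key ideas of mixture-of-experts models.", key)
        with urllib.request.urlopen(request) as response:
            print(extract_text(json.load(response)))
    else:
        print("Set GEMINI_API_KEY to send a live request.")
```

Keeping request construction separate from the network call makes the payload easy to inspect and test without an API key. In production code, an official client library would normally replace the hand-built request.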

The Future of AI: A World of Possibilities

The release of Gemini 1.5 Pro marks a significant milestone in the evolution of artificial intelligence. With its breakthrough capabilities in long-context understanding, multimodal processing, and efficient scaling, this next-generation model opens up a world of possibilities for developers, enterprises, and end-users alike.

As we look to the future, it's clear that AI will play an increasingly central role in shaping our world. From revolutionizing industries to enhancing our daily lives, the potential applications of advanced language models like Gemini 1.5 Pro are virtually limitless. By continuing to push the boundaries of what's possible with AI, Google is paving the way for a future in which intelligent systems work alongside humans to solve complex problems, drive innovation, and create value in ways we have yet to imagine.

For developers and enterprises eager to be at the forefront of this exciting new era, the Gemini 1.5 Pro API represents an unparalleled opportunity to harness the power of cutting-edge AI technology. By building applications that leverage the model's capabilities, organizations can differentiate themselves in an increasingly competitive landscape, deliver exceptional user experiences, and drive meaningful impact across industries.

As we embark on this journey into the future of AI, it's essential that we do so with a commitment to responsible development and deployment. By prioritizing safety, fairness, and transparency, we can ensure that the benefits of advanced language models like Gemini 1.5 Pro are realized in a way that promotes the well-being of individuals, communities, and society as a whole.

With the release of Gemini 1.5 Pro and the availability of its API, Google has once again demonstrated its leadership in the field of artificial intelligence. As developers and enterprises around the world begin to explore the possibilities unlocked by this groundbreaking technology, one thing is certain: the future of AI is bright, and the potential for transformation is truly limitless.